Fuck AI

2256 readers

199 users here now

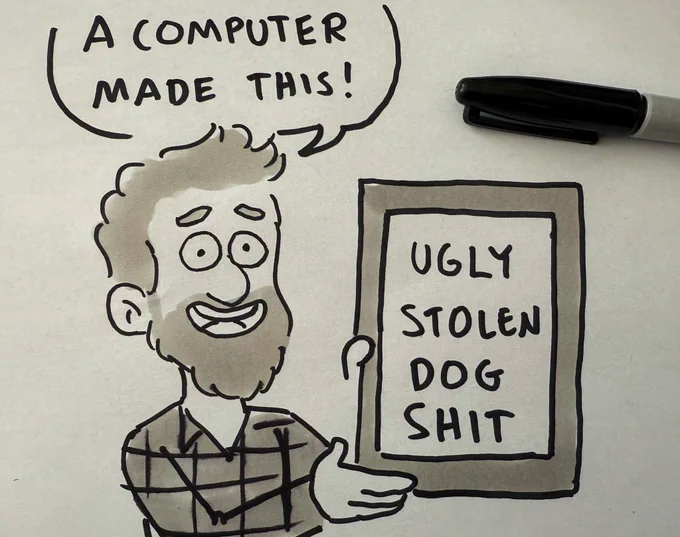

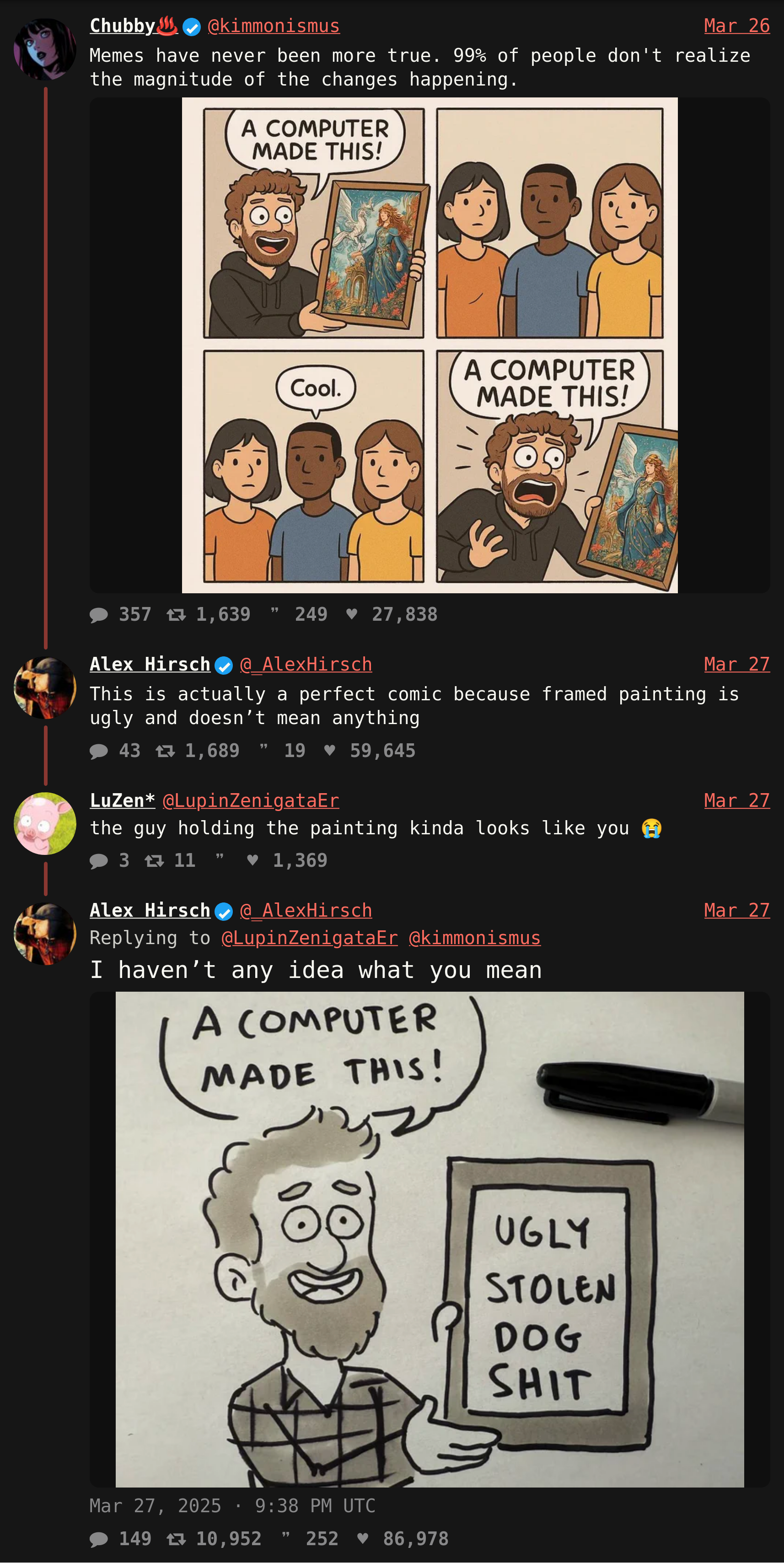

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 1 year ago

54

Festival crowd boos at video of conference speakers gushing about how great AI is

(www.businessinsider.com)

9

Citations Needed: A.I. Mysticism as Responsibility-Evasion PR Tactic (Podcast 63mins)

(citationsneeded.libsyn.com)

198

Sam Altman’s Studio Ghibli memes are another distraction from OpenAI’s money troubles

(pivot-to-ai.com)

30

AI sales startup 11x claims customers it doesn’t have for software that doesn’t work

(pivot-to-ai.com)
