this post was submitted on 05 Jun 2025

564 points (96.1% liked)

Fuck AI

3012 readers

588 users here now

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 1 year ago


Kind of different, no? One is visual representation, and the other is impersonation?

They both use copyrighted material, whether directly or indirectly.

They both use copyrighted material yes (and I agree that is bad) but let's work this argument through.

Before we get into this, I'd like to say I personally think AI is an absolute hell on earth which is causing tremendous societal damage. I wish we could un-invent AI and pretend it never happened, and the world would be better for that. But my personal views on AI are not going to factor into this argument.

I feel the argument here, and a view shared by many, is that since the AI was trained unethically, on copyrighted material, then any manner in which that AI is used is equally unethical.

My argument would be that the origin of a tool - be that ethical or unethical, good or evil - does not itself preclude judgment on the individuals later using that tool, for how they choose to use it.

When you ask an AI to generate an image, unless you specify otherwise it will create an amalgam based on its entire training set. The output image, even though it will be derived from work of many artists and photographers, will not by default be directly recognisable as the work of any single person.

When you use an AI to clone someone's voice, on the other hand, that doesn't even depend on data held within the model. It's done by you yourself feeding in a bunch of samples as inputs for the model to copy, and directing the AI to impersonate that individual directly.

As an end user we don't have any control over how the model was trained, but what we can choose is how that model is used, and to me, that makes a lot of difference.

We can use the tool to generate general things without impersonating anyone in particular, or we can use it to directly target and impersonate specific artists or individuals.

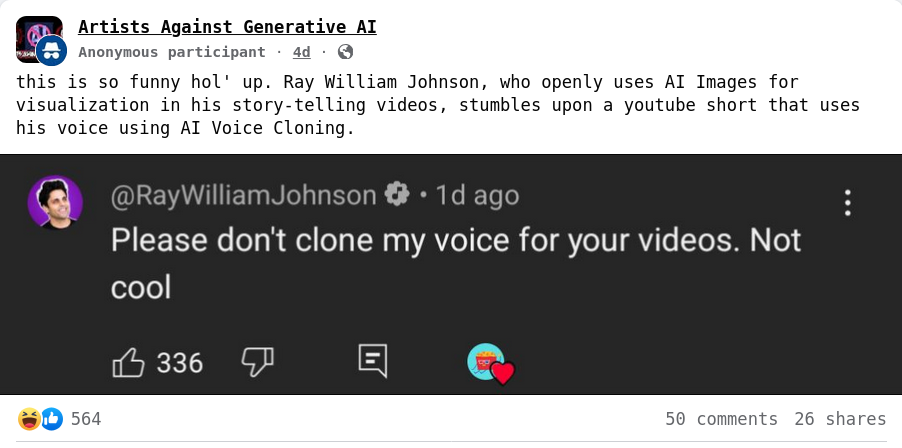

There's certainly plenty of hypocrisy in a person using stolen copyrighted work to generate images while at the same time complaining of someone doing the same to their voice, but our cathartic schadenfreude at saying "fuck you, you got what's coming" shouldn't mean we don't look objectively at these two activities in terms of their impact.

Fundamentally, generating a generic image versus cloning someone's voice are tremendously different in scope, the directness of who they target, and the level of infringement and harm caused. And so although nobody is innocent here, one activity is still far worse morally than the other - and by a very large amount.

Unless you're generating actual random noise with an AI image generator, it's almost like buying a fence's stolen goods, since it is mainly just copying and merging rather than creating. It's the same thing as piracy: if you do it and then support the creator, no one should mind, but the creator for AI art is everyone it stole from. If I pay for the generation, it's also saying to them "please steal more artwork, it is profitable."

The bigger issue is that someone who might have commissioned an artist instead uses an AI version of their art because it's close enough to the exact style they wanted, so now their artwork was stolen, and the AI's only source for actual good art is less likely to be in the art business. The photographer or artist whose art they would've used or gotten flak for not sourcing is still stolen from in the case of AI generation, but now it's stolen from 200 people so there's no obvious thing to point to besides maybe a style or a palette. If you tell it to replicate an artist's style, it's very obvious that it is recreating parts of images it stole; it just becomes less obvious which parts are stolen as you change the prompt.