How is AI-generated content detected and what is the process for disputing such claims?

Just an example:

I'm a programming student. One of my classes had a simple assignment: write a short script to calculate factorials. The purpose was to teach recursion. It should be doable in 4-5 lines max, probably fewer. My classmate decided to vibe code his assignment and ended up with a 55-line script. It worked, but it was literally 1100% of the length it needed to be, with lots of dead functions and 'None->None(None)' style explicit typing where it simply wasn't needed.

The code was hilariously obviously AI code.
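For a sense of scale, here is a minimal sketch of what an assignment like that might look like. The language is an assumption (the comment never names it, though the typing remark hints at Python), and the function name `factorial` and the negative-input check are illustrative, not taken from the actual coursework.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n, computed recursively."""
    # Guard added for illustration; the original assignment may not have required it.
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    # Base case: 0! and 1! are both 1.
    if n <= 1:
        return 1
    # Recursive case: n! = n * (n - 1)!
    return n * factorial(n - 1)


print(factorial(5))  # prints 120
```

Without the guard and comments, the core is four lines, which matches the 4-5 line estimate.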

Edit: I had like 3/4 typos here

Not all AI code is so obvious, though, especially if you give it detailed instructions on exactly what to do. It can be very hard to tell in some cases.

If it's not clear whether it's AI, it's not the code this policy was targeting. The policy exists so they don't have to waste time justifying the removal of true AI slop.

If the code looks bad enough to be indistinguishable from AI slop, I don't think it matters whether it was handwritten or not.

I guess the practical idea is that if your AI-generated code is so good, and you've reviewed it so well, that it fools the reviewer, the rule did its job and it doesn't matter.

But most of the time the AI code jumps out immediately to any experienced reviewer, and usually for bad reasons.

So then it's not really a blanket "no-AI" rule if it can't be enforced when the code is good enough? I suppose the rule should have been "no obviously bad AI" or some other equally subjective thing?

extension developers should be able to justify and explain the code they submit, within reason

I think this is the meat of how the policy will work. People can use AI or not. Nobody is going to know. But if someone slops in a giant submission and can’t explain why any of the code exists, it needs to go in the garbage.

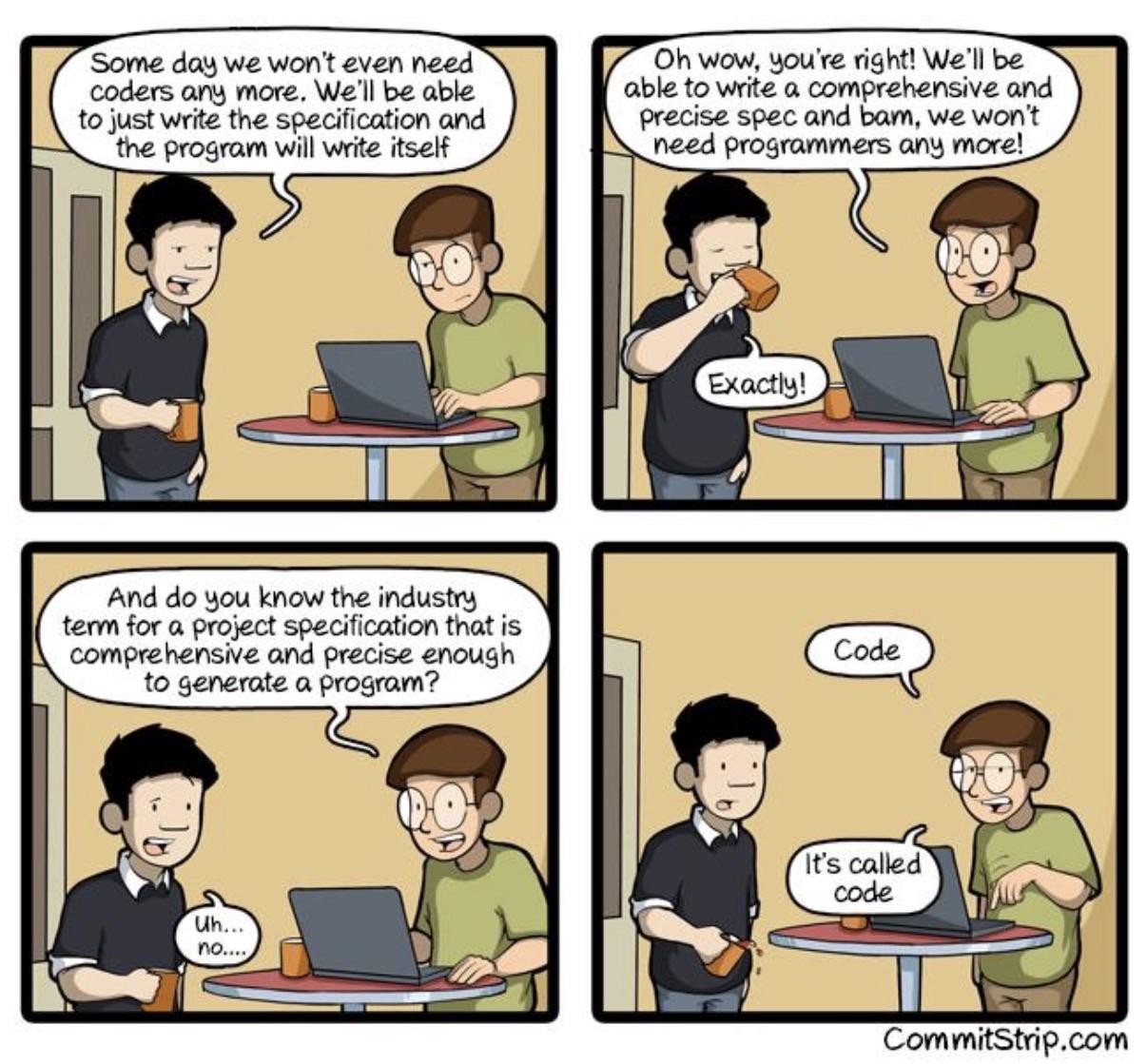

Too many people think because something finally “works”, it’s good. Once your AI has written code that seems to work, that’s supposed to be when the human starts their work. You’re not done. You’re not almost done. You have a working prototype that you now need to turn into something of value.

Too many people think because something finally “works”, it’s good. Once your AI has written code that seems to work, that’s supposed to be when the human starts their work.

Holy shit, preach!

Once you give a shit ton of prompts and the feature finally starts working, the code is most likely complete ass, probably filled with a ton of useless leftovers from previous iterations, redundant and unoptimized code. That's when you start reading/understanding the code and polishing it, not when you ship it lol

Just the fact that people are actually trying to regulate it instead of "too nuanced, I will fix it tomorrow" makes me happy.

And they are doing it pretty reasonably too. I like this.

You know, GNOME does some stupid stuff, but I can respect them for this.

Good.

I've mostly switched off SAMMI because their current head dev is all in on AI bullshit. Got maybe one thing left to move to streamerbot and I'm clear there. My two regular viewers won't notice at all, but I'll feel better about it.

You used to be able to tell an image was photoshopped because of the pixels. Now with code you can tell it was written with AI because of the comments.

Rare, much-needed GNOME W