Thanks for the info. I will look into it.

I've also experienced it for a long time now. I'm not exactly sure what causes this, but I'll try to look into it again.

Yes, it is fixed in the nightly builds and will be included in the next release.

I think this issue is fixed in this release.

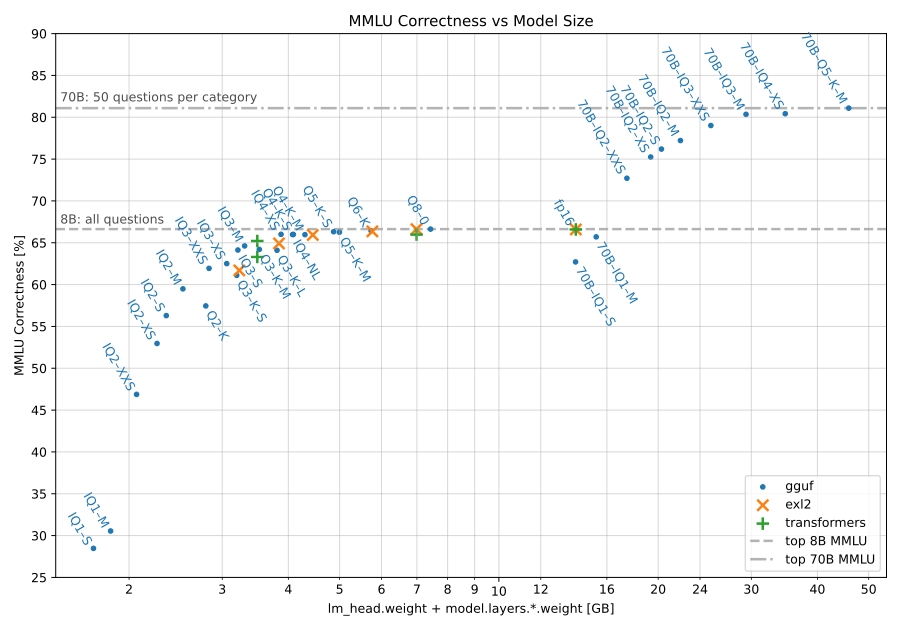

From what I've seen, it's definitely worth quantizing. I've used Llama 3 8B (fp16) and Llama 3 70B (q2_XS). The 70B version was way better, even with this quantization, and it fits perfectly in 24 GB of VRAM. There's also this comparison showing the quantization options and their benchmark scores:

To run this particular model, though, you would need about 45 GB of RAM just for the q2_K quant, according to Ollama. I think I could run it on my GPU and offload the rest of the layers to the CPU, but the performance wouldn't be that great (e.g., less than 1 t/s).
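For anyone who wants to sanity-check numbers like these, here is a rough back-of-the-envelope sketch. It only counts the weights, and everything in it is an assumption on my part: the ~2.31 bits per weight figure for a 2-bit "XS" quant, the 24 GB card, and the 2 GB I reserve for context and buffers. The function names are made up for illustration and are not part of Ollama or llama.cpp.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def gpu_fraction(model_gb: float, vram_gb: float, overhead_gb: float = 2.0) -> float:
    """Rough share of the model that fits on the GPU.

    overhead_gb is an assumed reserve for the KV cache and buffers;
    the real value depends on context length and backend.
    """
    usable = max(vram_gb - overhead_gb, 0.0)
    return min(usable / model_gb, 1.0)


if __name__ == "__main__":
    # 70B parameters at an assumed ~2.31 bits per weight
    size_70b = weights_size_gb(70e9, 2.31)
    print(f"70B at ~2.31 bpw: about {size_70b:.1f} GB of weights")

    # A 45 GB quant on an assumed 24 GB card
    share = gpu_fraction(45.0, 24.0)
    print(f"45 GB model on 24 GB VRAM: roughly {share:.0%} on the GPU")
```

With less than half of the model on the GPU, most of each token's work still runs on the CPU from system RAM, which is why I'd expect the speed to drop so much.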

Are you using Mistral 7B?

I also really like that model and their fine-tunes. If licensing is a concern, it's definitely a great choice.

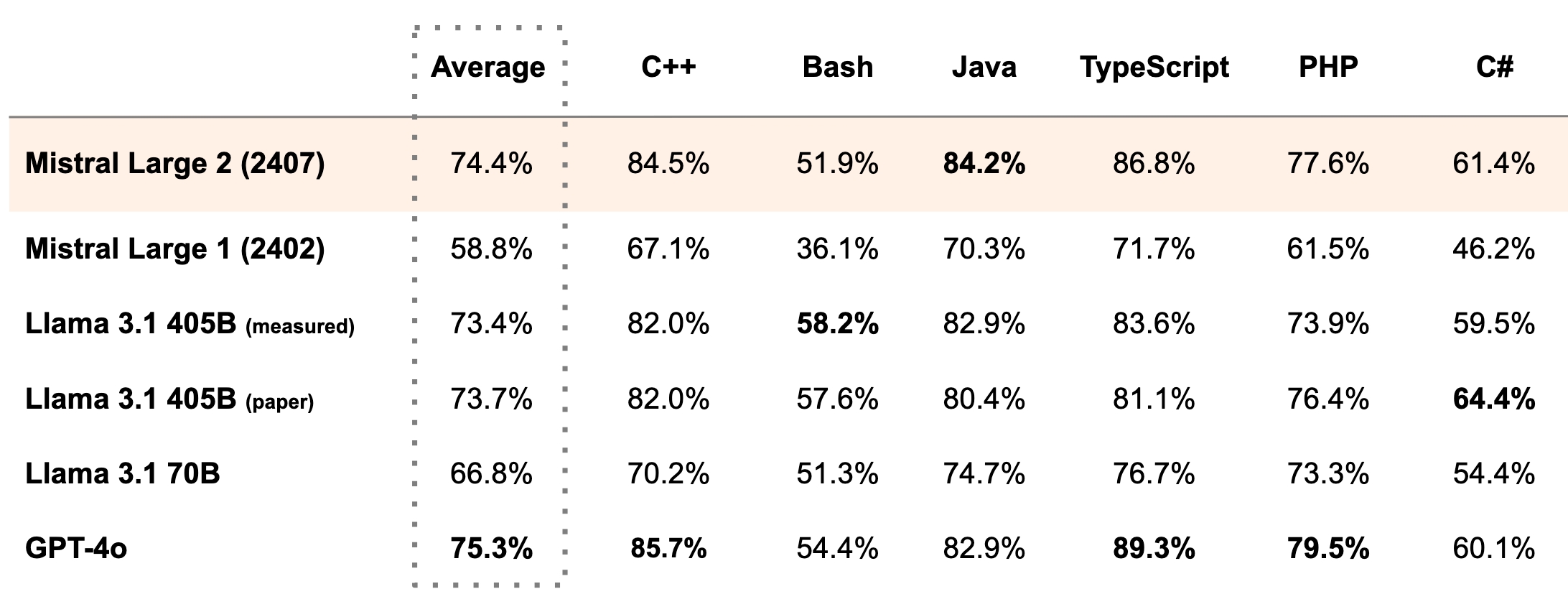

Mistral also has a new model, Mistral Nemo. I haven't tried it myself, but I heard it's quite good. It's also licensed under Apache 2.0 as far as I know.

Currently, I only have a free account there. I tried Hydroxide first, and I had no problem logging in. I was also able to fetch some emails. I will try hydroxide-push as well later.

I haven't heard of Hydroxide before; thank you for highlighting it! Just one question: Does it also require a premium account like the official bridge, or is it also available for free accounts?

I still intend to work on it, but I can only do it in my free time... and I don't have much of that right now.