The majority of "AI Experts" online that I've seen are business majors.

Then a ton of junior/mid software engineers who have used the OpenAI API.

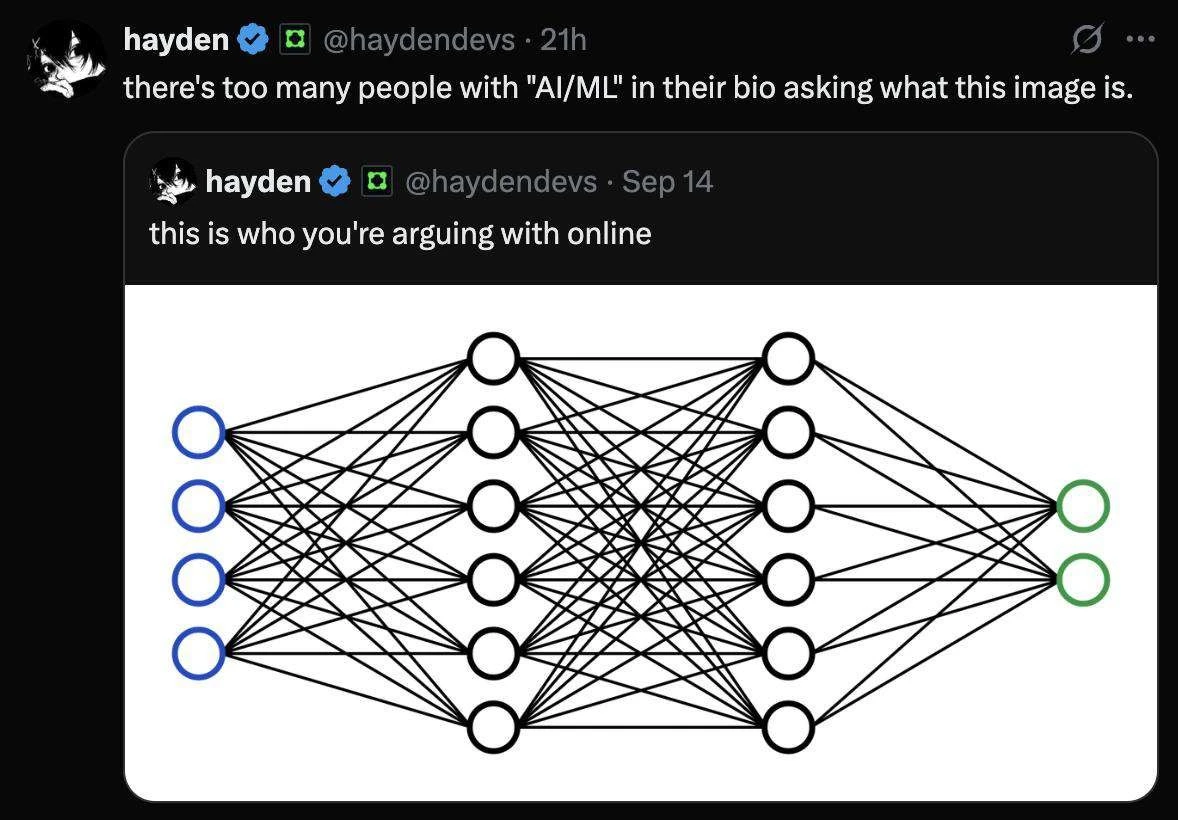

Finally, there are the very, very few technical people who have interacted with models directly, coded directly against them, maybe even trained some. And even then I don't think many of them truly understand what's going on in there.

Hell, I've been training models and using ML directly for a decade and I barely know what's going on in there. Don't worry, I get the image. I'm just calling out how frighteningly few people actually understand it, yet so many swear they know AI super well.