this post was submitted on 21 Dec 2025

Climate - truthful information about climate, related activism and politics.

Discussion of climate, how it is changing, activism around that, the politics, and the energy systems change we need in order to stabilize things.

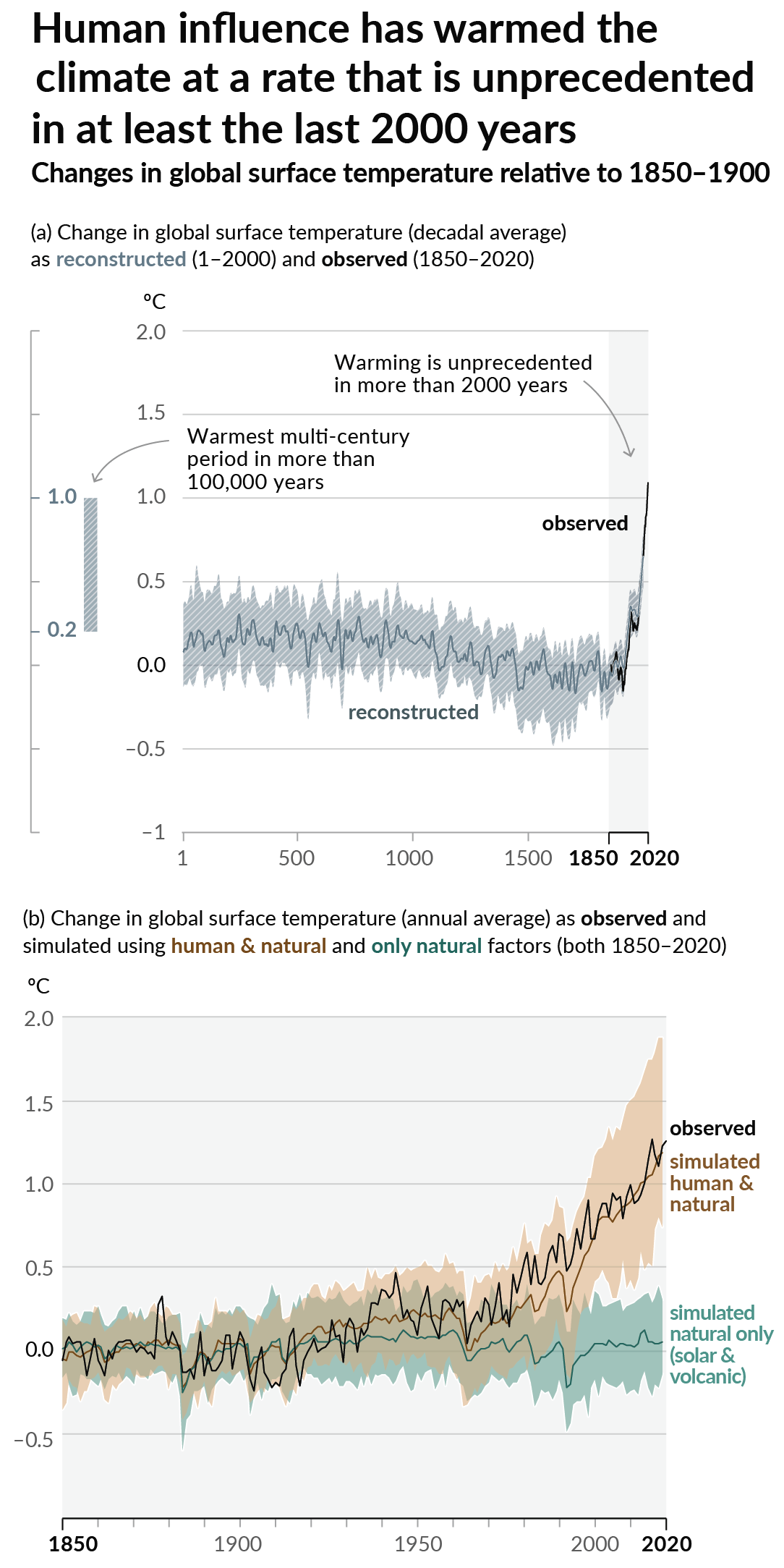

As a starting point, the burning of fossil fuels, and to a lesser extent deforestation and release of methane are responsible for the warming in recent decades:

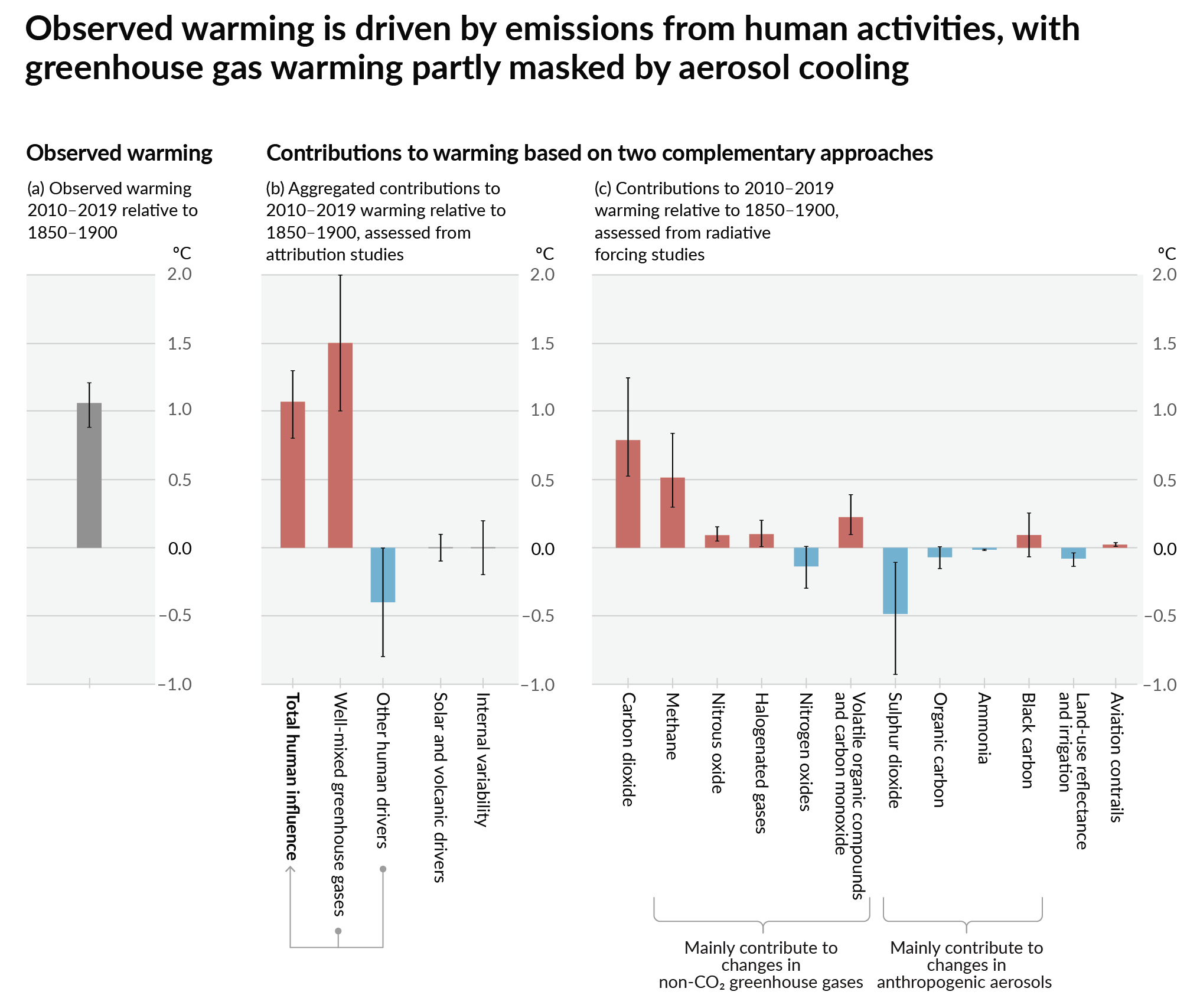

How much each change to the atmosphere has warmed the world:

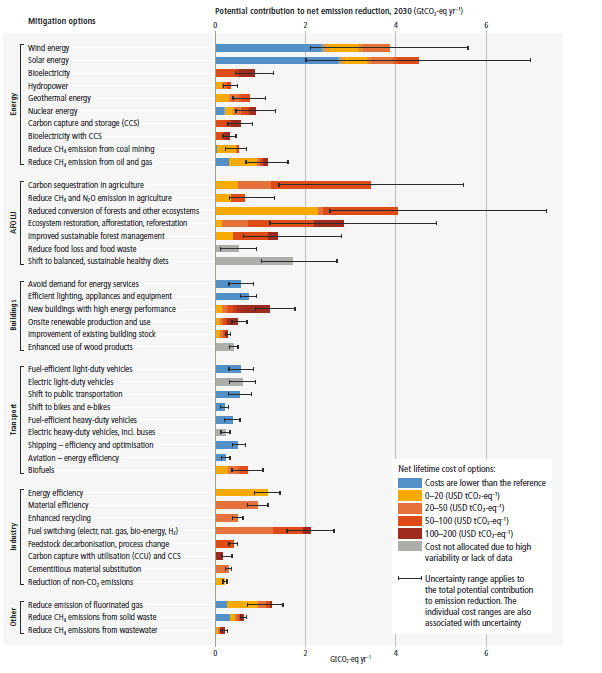

Recommended actions to cut greenhouse gas emissions in the near future:

Anti-science, inactivism, and unsupported conspiracy theories are not ok here.

founded 2 years ago

Chatbots have a built-in tendency toward sycophancy - to affirm the user and sound supportive, at the cost of truthfulness.

ChatGPT went through its sycophancy scandal recently, and I would have hoped they'd have given more weight to finding credible and factual sources, but apparently they haven't.

To be honest, I'm rather surprised that Meta AI didn't exhibit much sycophancy. Perhaps they're simply somewhat behind the others on the customization curve - a language model can't be sycophantic if it can't figure out the biases of its user, or remember them until the relevant prompt.

Grok, being a creation of a company owned by Elon Musk, has quite predictably been "softened up" the most - to cater to the remaining user base of Twitter. I would expect Grok's ability to present an unbiased and factual opinion to degrade further in the future.

Overall, my rather limited personal experience with LLMs suggests that most language models will happily lie to you unless you ask very carefully. They're only language models, not reality models, after all.