this post was submitted on 17 Nov 2025

memes

Community rules

1. Be civil

No trolling, bigotry or other insulting / annoying behaviour

2. No politics

This is a non-politics community. For political memes, please go to !politicalmemes@lemmy.world

3. No recent reposts

Check for reposts when posting a meme; you can only repost after 1 month.

4. No bots

No bots without the express approval of the mods or the admins.

5. No Spam/Ads/AI Slop

No advertisements or spam. This is an instance rule and the only way to live. We also consider AI slop to be spam in this community, and it is subject to removal.

A collection of some classic Lemmy memes for your enjoyment

Sister communities

- !tenforward@lemmy.world : Star Trek memes, chat and shitposts

- !lemmyshitpost@lemmy.world : Lemmy Shitposts, anything and everything goes.

- !linuxmemes@lemmy.world : Linux themed memes

- !comicstrips@lemmy.world : for those who love comic stories

founded 2 years ago

Because why bother saying anything if you aren't going to say anything? Offering correct information gives the other person a chance to correct themselves and improve. Just saying 'WRONG!' is a slap in the face that only serves to let you feel superior; it's masturbatory pretense.

As for the rest, those are all clearly issues, but none of them is such that handling the one I raised and handling them are mutually exclusive. And at least the second item actually follows from the one I mentioned. People being tricked into thinking LLMs are capable of thought feeds decision-makers' belief that people can simply be replaced. Viewing the systems as intelligent is a big part of what makes people trust them enough to blindly accept biases in the results.

Ideally, I'd say AI should be kept purely in the realm of research until it's developed enough for isolated use as a tool, but good luck getting that to happen. Post hoc adjustments are probably the best we can hope for, and my little suggestion is a fun way to at least try to mitigate some of the effects. It's certainly more likely to address some element of the issues than just saying 'WRONG!'

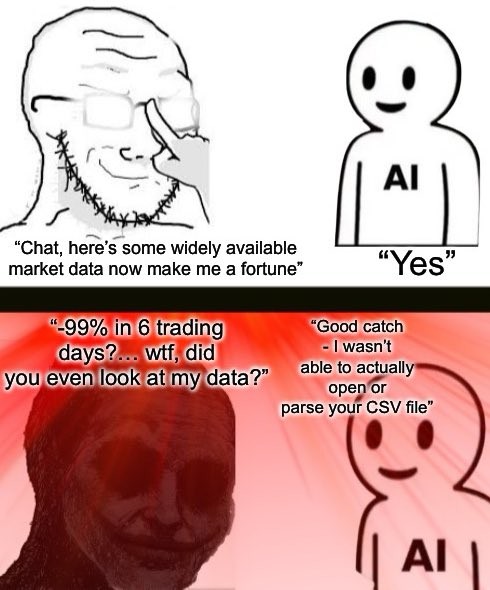

The fun part is that while the issues you mentioned could all create broad, hard-to-define harm if left unchecked, there are already examples of direct harm coming from people treating LLM outputs as meaningful.